Definition

Evidence-Based Medicine is the integration of best research evidence with clinical expertise and patient values.1

The EBP Cycle

Assess

Ask

Acquire

Appraise

Apply

Assess

1 (Sackett DL, Straus SE, Richardson WS, et al. Evidence-based medicine: how to practice and teach EBM. 2nd ed. Edinburgh: Churchill Livingstone, 2000.)

Overviews & Introductions

The 6S Pyramid

The 6S Evidence Hierarchy shows the level of evidence offered by different types of publications. As information moves up the pyramid, it becomes more synthesized and the evidence is stronger. The higher levels of the pyramid contain information that has been reviewed and synthesized by several experts, whereas information at the lower levels has yet to go through extensive review.

HLWiki Canada. (2016). Evidence-based Medicine - history. Retrieved from http://hlwiki.slais.ubc.ca/index.php/Evidence-based_medicine_-_history.

Systematic Reviews

Point of Care

Practice Guidelines

Asking an Answerable Question

Using PICO to Structure a Clinical Question

The PICO model offers one way to construct your question:

| PICO element | What to identify |
| --- | --- |
| P = Patient or population | Identify the patient groups and medical problem central to your question |
| I = Intervention | Which intervention is being studied? |
| C = Comparative Intervention | Which comparison interventions are being considered (if any)? |
| O = Outcome | What would you like to measure or improve? e.g., reduced mortality, improved quality of life |

Let's look at an example:

Situation: One of your area's goals is to reduce the occurrence of bed sores (pressure ulcers) and improve their treatment. Dynamic bed support surfaces are being considered.

This can be written into PICO categories:

P = Patients with pressure ulcers

I = Dynamic bed support surfaces

C = Static bed support surfaces

O = Fewer pressure ulcers

From this we can state the answerable research question as: "For patients with pressure ulcers, do dynamic bed support surfaces, when compared with static bed support surfaces, result in fewer pressure ulcers?"
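Because PICO is essentially a four-part template, it can be sketched as a small data structure. The `PicoQuestion` class and its `to_question` method below are hypothetical names used only for illustration, and the sentence pattern is an assumption modeled on the bed-surface example; C is optional, matching the "(if any)" in the table.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PicoQuestion:
    """Hypothetical container for the four PICO elements."""
    population: str
    intervention: str
    outcome: str
    comparison: Optional[str] = None  # C is optional ("if any")

    def to_question(self) -> str:
        # Assumed sentence pattern, modeled on the bed-surface example.
        comparison = (
            f", when compared with {self.comparison},"
            if self.comparison
            else ""
        )
        return (
            f"For {self.population}, do {self.intervention}"
            f"{comparison} result in {self.outcome}?"
        )


question = PicoQuestion(
    population="patients with pressure ulcers",
    intervention="dynamic bed support surfaces",
    comparison="static bed support surfaces",
    outcome="fewer pressure ulcers",
)
print(question.to_question())
```

Writing each element down separately before composing the sentence makes it easy to see when one of them (often C or O) is still missing.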

What Study Designs Answer Which Questions?

Appraising Therapy Studies

1. VALIDITY

Think FRISBE

| Letter | Validity criterion |
| --- | --- |
| F | Patient follow-up |
| R | Randomization |
| I | Intention to treat |
| S | Similar baseline characteristics |
| B | Blinding |
| E | Equal treatment |

2. CLINICAL IMPORTANCE

ARR Absolute Risk Reduction

The difference between the risk of an event without the treatment and the risk of an event with the treatment.

CER-EER=ARR

CER Control Event Rate

The risk of an event happening in the control / non-treatment group.

EER Experimental Event Rate

The risk of an event happening in the experimental / treatment group.

NNT Number Needed to Treat

The number of patients that would need to be treated in order to prevent one event.

1/ARR=NNT
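As a worked sketch, the helpers below compute ARR and NNT from a control event rate and an experimental event rate given as proportions. The function names and the example rates (20% of controls vs. 15% of treated patients) are hypothetical. Rounding NNT up to the next whole patient is the usual convention and is assumed here.

```python
import math


def absolute_risk_reduction(cer: float, eer: float) -> float:
    """ARR = CER - EER, with both rates given as proportions (0 to 1)."""
    return cer - eer


def number_needed_to_treat(cer: float, eer: float) -> int:
    """NNT = 1 / ARR, rounded up to a whole number of patients."""
    arr = absolute_risk_reduction(cer, eer)
    if arr <= 0:
        raise ValueError("NNT is only defined here when the treatment lowers the event rate")
    # Round before taking the ceiling so floating-point noise
    # (e.g. 19.999999999999993) does not shift the result.
    return math.ceil(round(1 / arr, 9))


# Hypothetical trial: 20% of controls and 15% of treated patients have the event.
cer, eer = 0.20, 0.15
print(round(absolute_risk_reduction(cer, eer), 4))  # 0.05
print(number_needed_to_treat(cer, eer))             # 20
```

Here an ARR of 5 percentage points means about 20 patients would need to be treated to prevent one event.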

RR Relative Risk / Risk Ratio

The ratio of the risk of an event with the treatment to the risk of an event without the treatment, often described as "50% greater risk", "5 times the risk", etc.

EER/CER=RR

RRR Relative Risk Reduction

The proportional reduction in the risk of an event in the experimental / treatment group compared with the control group; equivalently, the ARR divided by the CER.

(CER-EER)/CER=RRR
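The relative measures can be sketched the same way. The function names and the example rates below (20% of controls vs. 15% of treated patients) are hypothetical; the code simply applies RR = EER/CER and RRR = (CER-EER)/CER, and the RRR docstring notes that it also equals 1 - RR.

```python
def relative_risk(cer: float, eer: float) -> float:
    """RR = EER / CER: risk with treatment relative to risk without it."""
    if cer <= 0:
        raise ValueError("CER must be greater than 0")
    return eer / cer


def relative_risk_reduction(cer: float, eer: float) -> float:
    """RRR = (CER - EER) / CER, which also equals 1 - RR."""
    if cer <= 0:
        raise ValueError("CER must be greater than 0")
    return (cer - eer) / cer


# Hypothetical rates: 20% of controls vs. 15% of treated patients.
cer, eer = 0.20, 0.15
rr = relative_risk(cer, eer)
rrr = relative_risk_reduction(cer, eer)
print(f"RR = {rr:.2f}, RRR = {rrr:.0%}")  # RR = 0.75, RRR = 25%
```

With these rates the RRR (25%) looks much larger than the absolute difference of 5 percentage points, which is why relative and absolute measures are best read together.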

Appraising Diagnosis Studies

1. VALIDITY

2. CLINICAL IMPORTANCE

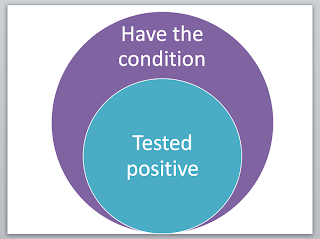

Sensitivity (Sn)

Of the people who HAVE the condition, the percentage or proportion who test positive with the experimental test.

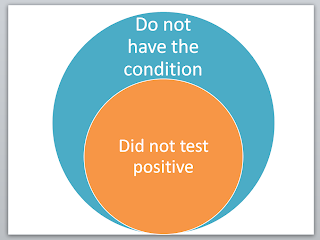

Specificity (Sp)

Of the people who DO NOT have the condition, the percentage or proportion who test negative with the experimental test.
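Sensitivity and specificity are usually read off a 2x2 table that compares the experimental test with a reference standard. The sketch below assumes counts labeled tp, fp, fn and tn (true/false positives/negatives); the function names and the example counts are hypothetical.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Sn = TP / (TP + FN): share of people WITH the condition who test positive."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Sp = TN / (TN + FP): share of people WITHOUT the condition who test negative."""
    return tn / (tn + fp)


# Hypothetical 2x2 counts against a reference standard:
#                 condition present   condition absent
# test positive        tp = 90            fp = 30
# test negative        fn = 10            tn = 270
print(f"Sn = {sensitivity(90, 10):.0%}")   # Sn = 90%
print(f"Sp = {specificity(270, 30):.0%}")  # Sp = 90%
```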

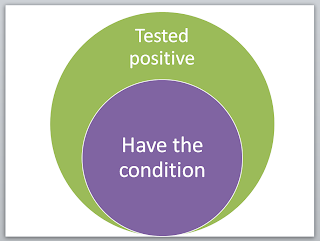

Positive Predictive Value (PPV)

Of the people who test positive with the experimental test, the percentage or proportion who actually have the condition.

Negative Predictive Value (NPV)

Of the people who test negative with the experimental test, the percentage or proportion who do not have the condition.
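Predictive values come from the same kind of 2x2 table but are calculated across the rows (test result) rather than down the columns (condition status). The function names and counts below are hypothetical, chosen only to illustrate the arithmetic.

```python
def positive_predictive_value(tp: int, fp: int) -> float:
    """PPV = TP / (TP + FP): share of positive tests that are true positives."""
    return tp / (tp + fp)


def negative_predictive_value(tn: int, fn: int) -> float:
    """NPV = TN / (TN + FN): share of negative tests that are true negatives."""
    return tn / (tn + fn)


# Hypothetical 2x2 counts (experimental test vs. reference standard):
tp, fp, fn, tn = 90, 30, 10, 270
print(f"PPV = {positive_predictive_value(tp, fp):.0%}")  # PPV = 75%
print(f"NPV = {negative_predictive_value(tn, fn):.1%}")  # NPV = 96.4%
```

Unlike sensitivity and specificity, predictive values depend on how common the condition is in the group tested (here 100 of 400 people), so they may not carry over to a population with a different prevalence.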

Appraising Systematic Reviews

VALIDITY